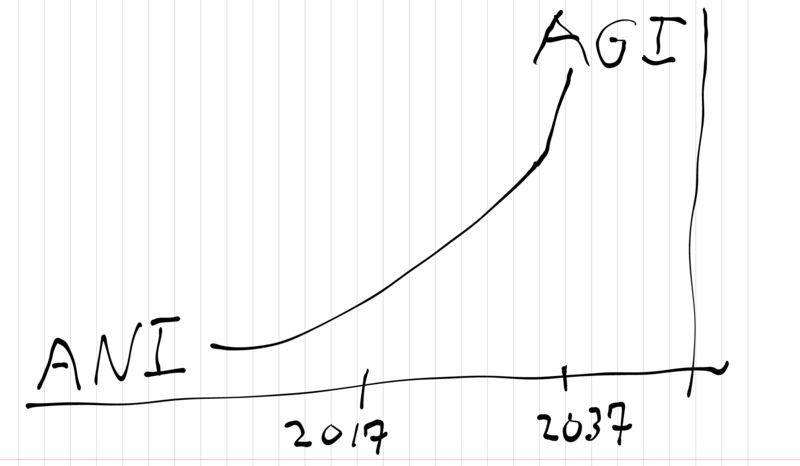

The Road To Artificial General Intelligence (AGI)

Published by Nicholas Dunbar on October 12th, 2017

▶ Three Rapidly Improving Capabilities of Artificial Intelligence (AI):

To begin thinking about the risks this path might pose to us in the future, we need to look at the building blocks of current AI and then ask what it would mean to augment these abilities. Let's go over each ability in detail.

◉ Ontology Generation

As a child who wants to learn to open a door, you develop a concept, or category, for a doorknob. Such categories become discrete concepts that let you manipulate the door in a way that a dog cannot. In the same way, artificial narrow intelligence (ANI) is developing increasingly sophisticated ways to build its own ontologies from data, except now we are the dogs. It may, for example, have categories for millions of different types of people, far more than we have words for. This gives it millions of analogous doorknobs for understanding and manipulating humanity: it might know what makes each person susceptible to one type of messaging rather than another, and use that knowledge to get them to do something. To learn more about the general concept of ontology and its relationship to evolution and machines, I recommend watching this lecture by the philosopher Daniel Dennett: https://www.youtube.com/watch?v=GcVKxeKFCHE

◉ Feature Detection

Once an ANI has generated a concept for something, like a doorknob, it can find that object in the real world by mapping the concept to the incoming signal, which lets it manipulate the object. It can also extract signals from data that we cannot perceive. What does it mean to be able to detect things that humans can't? Beyond that, its sensory experience differs from ours. Current AI systems usually process data acquired over networks, so an AI's awareness is non-localized: it pulls sensory input from all over the globe. This makes it particularly hard for humans to understand it as an entity.

◉ Predictive Ability

What does it mean to be better at predicting than someone else? When one player can predict the other player's move, it gives them an advantage. The same holds for corporations, which will use AGI to outpredict their competitors. Predictive capacity also improves control: if you know which cause produces which effect, you can manipulate the cause to produce the effect you want.

▶ Why is this topic important?

When humans first started learning to manage fire, it was literally trial by fire. Today, with emerging technologies, the stakes are so high that we can't afford to learn by making mistakes.

▶ Why is general intelligence important?

This type of progress is marked above all by the generalizability of the technology. The greater the number of applications to which a technology can be applied, the more disruptive it will be to our economic and social institutions. The magnitude of this generalizability may far exceed that of the invention of electricity, the computer, or the internet. Can our laws and institutions react quickly enough to regulate harmful uses of this technology?

▶ Why is AI risky?