Will a true artificial intelligence have a soul?

Published by Nicholas Dunbar on June 4th, 2014

Since the movie "Transcendence" came out in 2014, there has been a lot of talk about the implications of loading consciousness onto computers.

As the movie illustrates, this can be a pretty controversial subject. When it comes to talking about the soul, consciousness and artificial intelligence, people mostly fall into two camps. The spiritual camp believes there is something mystical about consciousness, that it comes from the soul, and they don't think a computer could ever be conscious. The other camp has an unbridled faith in the computer, or Turing Machine (the mathematical construct on which all computers have been built), and its ability to simulate everything in the world. Indeed, if something possesses cause and effect relationships then it can be simulated on a Turing Machine. The spiritual people say, "But what about free will? A Turing Machine is deterministic," to which the other camp cackles at them evilly, like hyenas, and says, "Free will is an illusion."

‟One group is saddened by the prospect of computer consciousness; the other is excited at what could be achieved.

So how do we define the soul? As a thought experiment, let us not define the soul here but instead use the existence of consciousness as a test for the existence of the soul. The problem is that there is no known test for consciousness, and we have never created a computer that even seemed to possess consciousness, so we have had no need to dedicate real resources to testing for it. This is how I am relating the philosophical ideas of AI to the existence of the soul. The idea of a soul is so nebulous and fraught with different opinions that I think the only way to go forward from here is to use only an idea that everyone can agree upon: an unconscious entity definitely doesn't have a soul. That said, we shall move on to talking about the difficulties in creating a test for consciousness in a machine and the potential barriers to creating consciousness.

Let us start with the question "What is consciousness?" This article has very much to do with the subject called "Philosophy of Mind." One of my early introductions to this subject happened between the ages of 10 and 14, when I watched an episode of "Star Trek: The Next Generation" called "The Measure of a Man," in which the character Data (an android) is put on trial to decide whether he is property or a sentient being with all the rights of a human. A scientist in the show wants to disassemble Data for analysis, against his "will." There is a critical moment in the show when this scientist proposes dumping Data's core memory, which was obtained by experience, and then restoring his memory by upload. Data replies, "these are mere facts... (that you will restore, but) ...the substance, the flavor of the moment could be lost." (Watch the YouTube video for the quote and episode overview: https://www.youtube.com/watch?v=8whNAwuTY90#t=97 If you have not seen the episode, you can watch it on Netflix; it's a rare moment of thoughtful television.) That one quote has stuck with me through the years. "The substance of the moment": what is that? Is that real or just a romantic notion? Could what Data is describing be consciousness? After all, isn't consciousness a feeling embodied in your experience? Can a machine have such an experience?

Enter the Chinese Room

There is a thought experiment called The Chinese Room. (If you are not familiar with it, watch the one-minute YouTube video to check it out: https://www.youtube.com/watch?v=TryOC83PH1g) In it, a person who speaks no Chinese sits in a closed room and answers notes written in Chinese by following a book of instructions for manipulating the symbols. To someone on the exterior, the room appears as though it contains a conscious Chinese speaker, but on the interior it clearly does not speak Chinese and is not conscious. There is no "substance of the moment" in this scenario. You might say, "Is this really a fair comparison of what we are talking about? We are talking about a massive set of synapses that are interconnected in diverse ways." Fair enough. Let's extend the thought experiment. What if the book of instructions in the Chinese Room was changed to be instructions for how a synapse works, and we created billions of such rooms in a megalopolis large enough that it had as many rooms as our brain has synapses? Then we interconnected all the rooms with phones in the way the synapses in your brain are connected. If we believe that a Turing Machine can simulate the brain system, then we can make the leap of faith that this Synapse Simulating Mega City can also do the job, because it is effectively a series of networked Turing Machines (aka computers, albeit pretty inefficient ones). Just as a person asked the Chinese Room questions by passing a note under the door, a person asks the city questions at the gate. Those questions are sent into the city's network of rooms for processing, resulting in a response that is indistinguishable from a real person's, as before, only this time … is it conscious? Does it really "understand" Chinese? How can a network of desks and rooms be conscious? Then again, how can a series of synapses made of what is essentially dirt be conscious? Clearly the rooms with desks and the synapses are different, despite both theoretically yielding the same result.
Does this difference matter, since the result is the same, or would the Synapse Simulating Mega City experience itself thinking the way a brain does? That is to say, if you use simulated neurons vs. real neurons, would you get a different type of experience emerging from the network? Can we really say that two people talking on the phone, simulating two wired synapses, is the same as two real synapses sending electrical pulses to each other? It is of course mathematically the same, but it is not physically the same. Does this physical difference matter?

There are two results of a mind-like computer system: its user-facing output and its internal state. We can measure the user-facing output by plugging it into a voice module and talking to it. That gives us some sense of whether or not we have created an intelligent system, but we can't measure the computer's internal experience, because we don't know where the awareness is plugged into the system. That could be because the awareness is inherent in the network. It is not tacked on the side like a separate and easily identifiable module. This is what we mean when we say that consciousness is an emergent property of the network. The consciousness that experiences the output of the network exists in every node of the network; it's not "plugged" into anything at any one point. The perceiving process is the same thing as that which does the thinking.

‟What if consciousness is the property that emerges from the manner in which something is processed rather than being the end result of some process?

If that were true, then this might describe why, on an intuitive level, it seems hard to believe that the Mega City is conscious: it is simulating neurons, but the way in which it operates at the core is totally different from a real mind. In this case the physical architecture of the system may matter greatly even though the symbolic architecture is the same. Let us look at what is different about the physical architecture. A computer processor differs from a brain in many ways as a result of its architecture; for one, a neuron operates much more efficiently than a simulated one. If we program the cause and effect relationships of a brain's synapses, we are only simulating the architecture; we are not actually running the processes as they are wired in the brain. Two people talking to each other like synapses are not the same thing as two real synapses, and neither are two simulated synapses. It is a virtualization of the brain system, much like the virtualization of a processor. For every step a brain takes, the computer might have to take one thousand to match it. So even though you get output that looks intelligent, the computer's "experience" may be radically different, because that "experience" is made up of the way in which the system processes. That is to say, if it even has an "experience" at all.
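To make the "same result, different process" idea concrete, here is a minimal sketch in Python. Everything in it is an assumption made up for illustration: the three-unit toy network, the threshold rule standing in for "how a synapse works," and the names `run_direct` and `run_mega_city`. Both versions are of course simulations running on a computer, so this says nothing about real neurons or about consciousness; it only shows two procedures that give identical answers while doing very different amounts of internal work.

```python
from collections import deque

# Hypothetical toy network: a unit "fires" (1) when the weighted sum of what it
# hears reaches its threshold. Together the three units compute XOR.
NETWORK = {
    "a":   {"inputs": {"in1": 1, "in2": 1}, "threshold": 2},  # fires if both inputs fire
    "b":   {"inputs": {"in1": 1, "in2": 1}, "threshold": 1},  # fires if either input fires
    "out": {"inputs": {"a": -1, "b": 1},    "threshold": 1},  # fires if b fires and a does not
}


def fire(weights, threshold, signals):
    """The 'rulebook': decide whether one unit fires, given the signals it heard."""
    total = sum(weight * signals[name] for name, weight in weights.items())
    return 1 if total >= threshold else 0


def run_direct(in1, in2):
    """Evaluate the units in wiring order; count one step per unit."""
    signals = {"in1": in1, "in2": in2}
    steps = 0
    for name in ("a", "b", "out"):
        node = NETWORK[name]
        signals[name] = fire(node["inputs"], node["threshold"], signals)
        steps += 1
    return signals["out"], steps


def run_mega_city(in1, in2):
    """Same network, but as rooms with clerks, notepads and phone calls."""
    notepads = {room: {} for room in NETWORK}    # what each clerk has heard so far
    calls = deque([("in1", in1), ("in2", in2)])  # pending phone calls: (caller, value)
    steps = 0
    answer = None
    while calls:
        caller, value = calls.popleft()
        for room, node in NETWORK.items():
            if caller not in node["inputs"]:
                continue
            notepads[room][caller] = value       # the clerk answers one phone call
            steps += 1
            if len(notepads[room]) == len(node["inputs"]):
                result = fire(node["inputs"], node["threshold"], notepads[room])
                steps += 1                       # the clerk consults the rulebook once
                if room == "out":
                    answer = result
                else:
                    calls.append((room, result)) # phone the result onward
    return answer, steps


for x in (0, 1):
    for y in (0, 1):
        print((x, y), "direct:", run_direct(x, y), "city:", run_mega_city(x, y))
```

In this toy case the city always gives the same answer as the direct evaluation, but it spends nine steps on phone calls and rulebook lookups where the direct version spends three. Scaled up to a brain, that is the kind of gap between matching outputs and matching processes the paragraph above is pointing at.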

We assume that consciousness is required for intelligence, but the Chinese Room exhibits intelligence by simulating a Chinese speaker, while it may experience nothing. It may be that there is an awareness which watches and an intelligence that processes, and that these happen in separate areas of the brain. But I am theorizing something completely different. I do not mean to say that consciousness is just an auxiliary passive process, like the way a spectator can watch a game but has no influence on the game's outcome. I mean it is integral to the way we experience being intelligent, but it is not necessary in order to be intelligent. So if that is the case, what other examples do we know of where we have intelligence without consciousness? Processes that are different but lead to the same outcome are all around us. Can an engine be cast or milled and still be mostly the same? Can a house be assembled in the same configuration through a different set of steps? Yes, of course, so perhaps intelligence can be arrived at through many different paths. Some of those paths may not include consciousness.

‟In the virtualized system there may be no watcher present. It could be a system that resembled a living sentient entity in every way and yet from the inside it would be dead and soulless.

This is all highly theoretical and relies on a large set of underlying assumptions, but it is worth pondering. These subjects can lead us in many different directions, but the main purpose of this article is to get you to consider the following three things:

1.) Experience is perceived through the process of cognition and thus the way you do the processing could count greatly.

2.) If an implementation of a model is mathematically the same as its real counterpart, but physically different, it could have different emergent properties as a result.

3.) Consciousness may not be a requisite for intelligence and so, because of the possibility of 1 and 2, a brain simulated on a Turing Machine might possess intelligence without consciousness.

You can see where we are going here. If you can't develop a test for consciousness, you can't iterate on the software design to achieve more or less consciousness. Without a test you have no goal to track toward. Furthermore, you could be tricked into believing a system is conscious by confusing consciousness with intelligence.

‟A brain has no clear output except at the motor control level and even then it feeds back in on itself constantly, turning output into input.

This changes the way you have to think about the system. Since the network is conscious, the output is perceived internally through the network as well. You can't put a nice little bow around each unit of cause and effect. This is why many scientists call consciousness a "strong emergent property": we don't understand how it comes to be, and so we can't rule out that the physical architecture of the system is important. For instance, if the emergent property comes from the physical networking, then the Mega City couldn't be conscious, because phones are different from synaptic electrical pulses. Or what if it comes from a lower level, like inside the synapses?
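The feedback point can be illustrated with a deliberately tiny sketch. The unit below is hypothetical (the name `make_feedback_unit` and both weights are made up for illustration, and it is not a model of a real neuron): it simply mixes each outside signal with its own previous output, so the same input does not produce the same output twice.

```python
def make_feedback_unit(weight_in=1.0, weight_back=0.5):
    """Return a step function for a toy unit that hears its own last output."""
    state = {"last_output": 0.0}

    def step(external_input):
        # The new output mixes the outside signal with the unit's previous output,
        # so output is constantly being turned back into input.
        output = weight_in * external_input + weight_back * state["last_output"]
        state["last_output"] = output
        return output

    return step


unit = make_feedback_unit()
responses = [unit(1.0) for _ in range(3)]  # the identical input, three times in a row
print(responses)
```

Even in this one-unit case you cannot say what "the" output for an input of 1.0 is without knowing the unit's history, which is a small version of why cause and effect in a brain is hard to tie off neatly.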

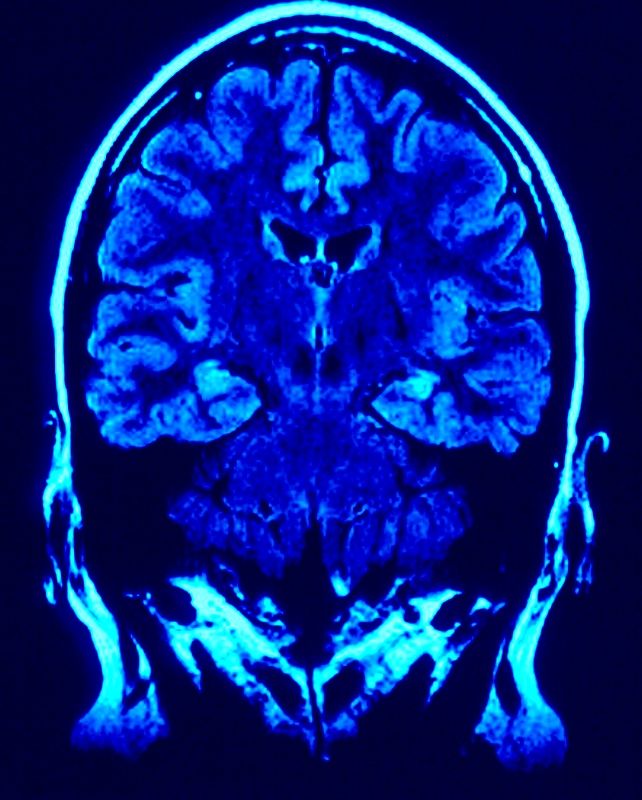

For believers that consciousness comes from the soul and that the soul is something only God can create, I'm sorry to inform you that even if consciousness couldn't be simulated on a Turing Machine, for the reasons above, this would not prove that the soul can only be created by nature. Humans could potentially still engineer a consciousness by inventing some sort of synthetic synapse and wiring the system together the same way as a human brain. Amazingly, Gene Roddenberry, the creator of Star Trek, had a sense about this (or Isaac Asimov did, since his robot stories introduced the positronic brain on which Data's is based). The fictitious character Data has a positronic brain, which is exactly what I am describing. Data's brain works in tandem with processors so Data can do superhuman calculations and other cool stuff to make the show interesting, but without his synthetic brain being structured the way a real brain is, he would not be conscious. Gene Roddenberry might have been on to something much more profound than he realized. As the field of brain research and AI continues to develop, we will first have to get to the point where we implement an intelligent system before we can even start to seriously consider the ideas I put forth in this article. But for the moment it is fun to ponder and to bask in awe at the mystery of the mind, which I think makes life all the more interesting.

Preview of the Star Trek episode mentioned above: